I was given the task of designing and implementing the sounds for several of the pod racers in Kinect Star Wars.

This was one of the most exciting sound design challenges I'd been given, but at first I wasn't entirely sure where to begin. If you go back and watch the original pod race scene from Episode I, the vast majority of the amazing sound design happens in hundreds of deceptively brief moments: quick pass-bys, slivers of engine sound, a line or two of character dialog, and constantly shifting camera positions. The goal was to keep the original feel of the pods but translate it to the non-linear format of a game, where the player would have to stay with those sounds from a single perspective for several minutes at a time.

After a lot of experimenting, I realized the trick would be in the details. Instead of a handful of great engine loops, we were going to need dozens upon dozens of constituent sounds that, when gelled together in Wwise, would create a cohesive whole. Each pod has engine loops, but it also has several one-shots: accelerations, brakes, turbo boosts, gear shifts, idles, banking sounds, jumps, damaged engine sounds, impacts and turns. Counting the variations of each, I'd estimate each pod had roughly thirty discrete elements.

Once that list was made, I started designing the sounds. I knew Ben Burtt had used a lot of engine sounds, so I began there. Between library material and field recordings, I built up a nice collection of Formula 1 cars, boat engines, assorted car recordings, motorcycles and other vehicle material.

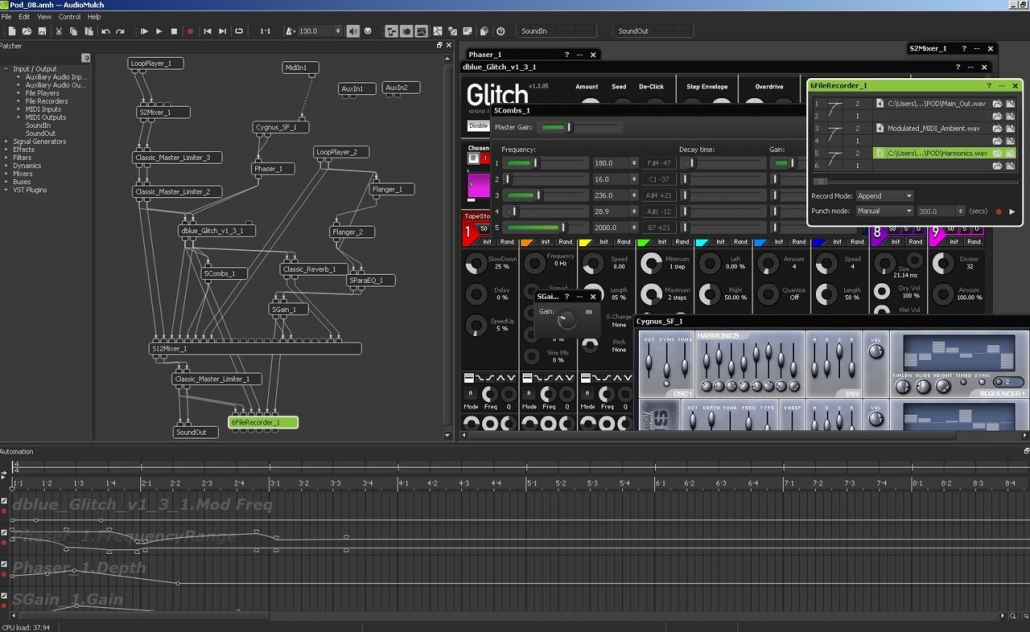

Molding everything together took a lot of trial and error, but I eventually came to rely on three primary tools in addition to my DAW: Reason, GRM Tools and my all-time favorite sound design software, AudioMulch (a modular sound processing program that lets you do unimaginably "wrong" things that more traditional DAWs won't).

Here’s a typical AudioMulch patch from when I was making the pods:

Reason was invaluable because it let me easily import sounds into the NN-XT sampler and play with them until they sat right with the other elements. I also relied heavily on synths like Thor to find higher-end harmonics that, placed in the mix, made everything sound fuller and slightly more Star Wars-ish without competing with the low-end growl of the engines.

The final game had about 17 pods, including Anakin's, Sebulba's and several AI-controlled ones. Below is a small set of the sounds I made this way, including accelerations, gear shifts, turbo boosts, engine loops, modulations and sweeteners:

You can visit the SoundCloud page here.

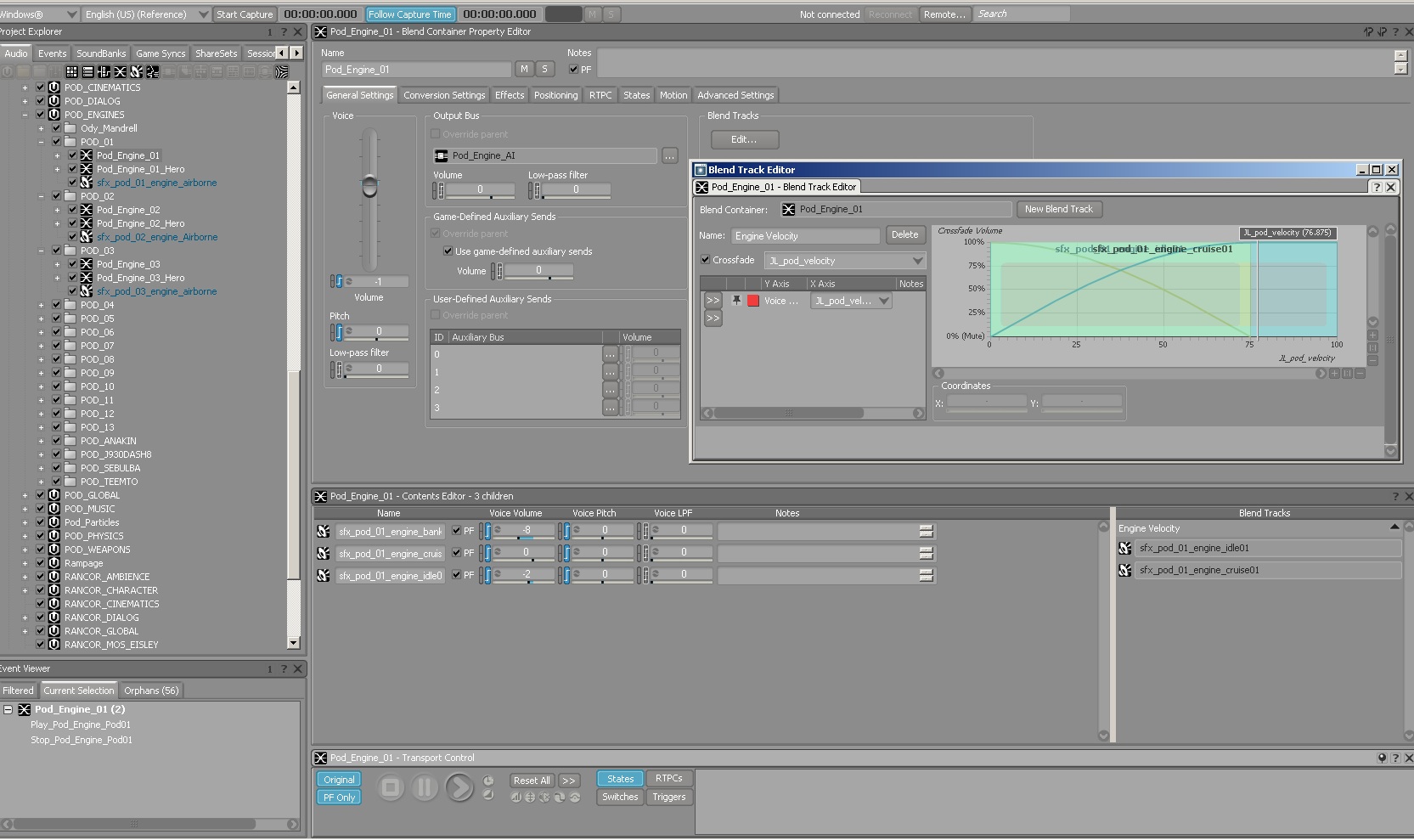

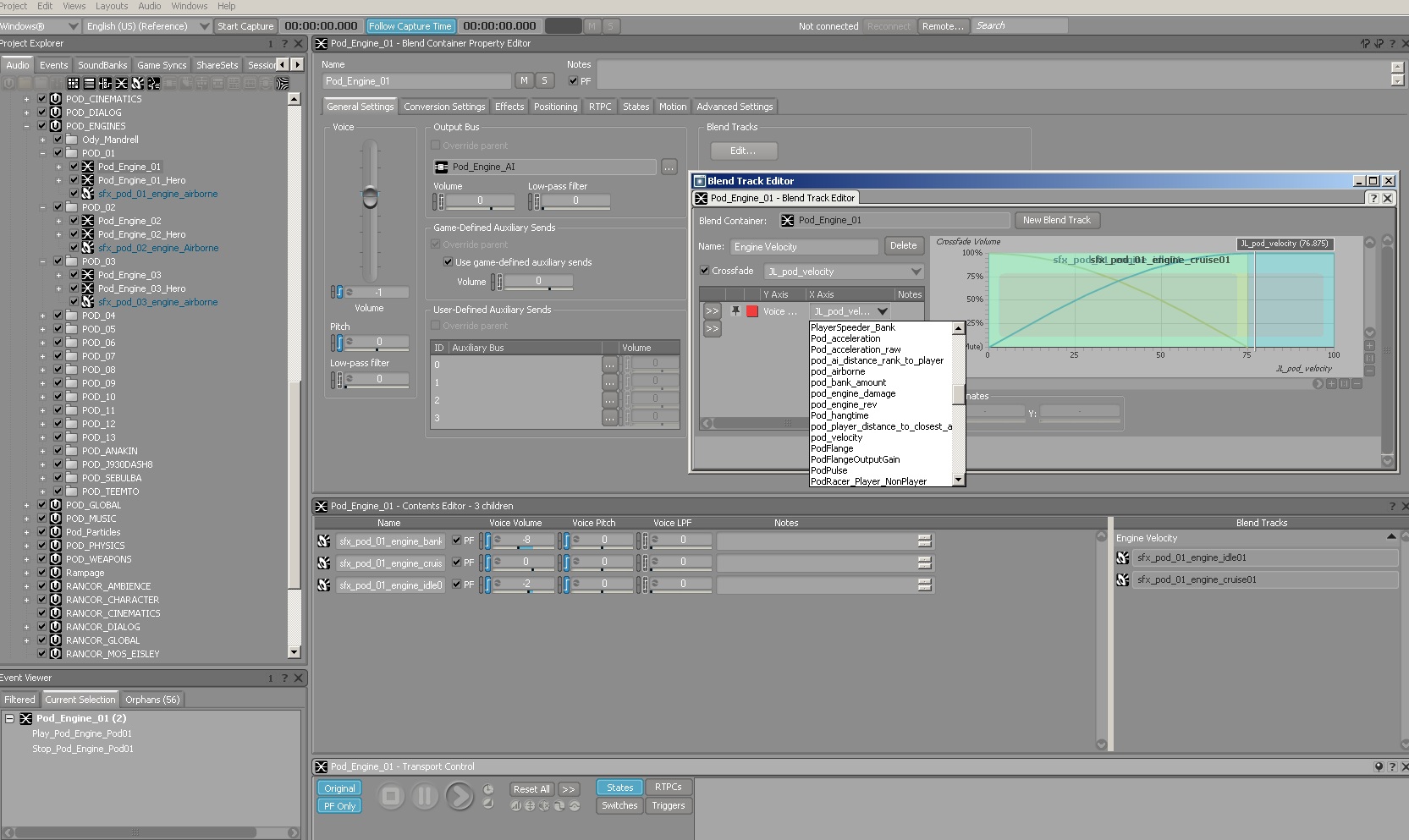

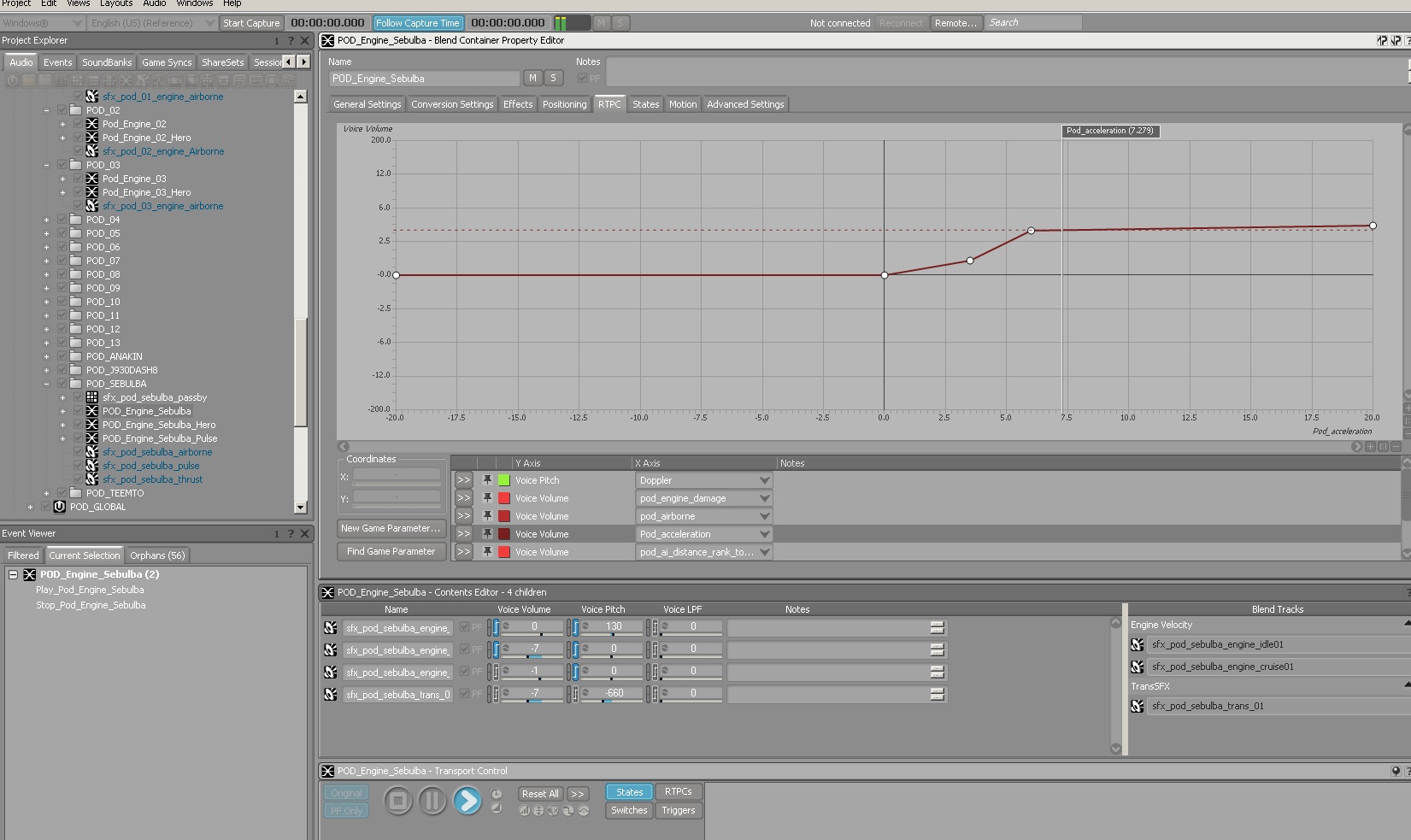

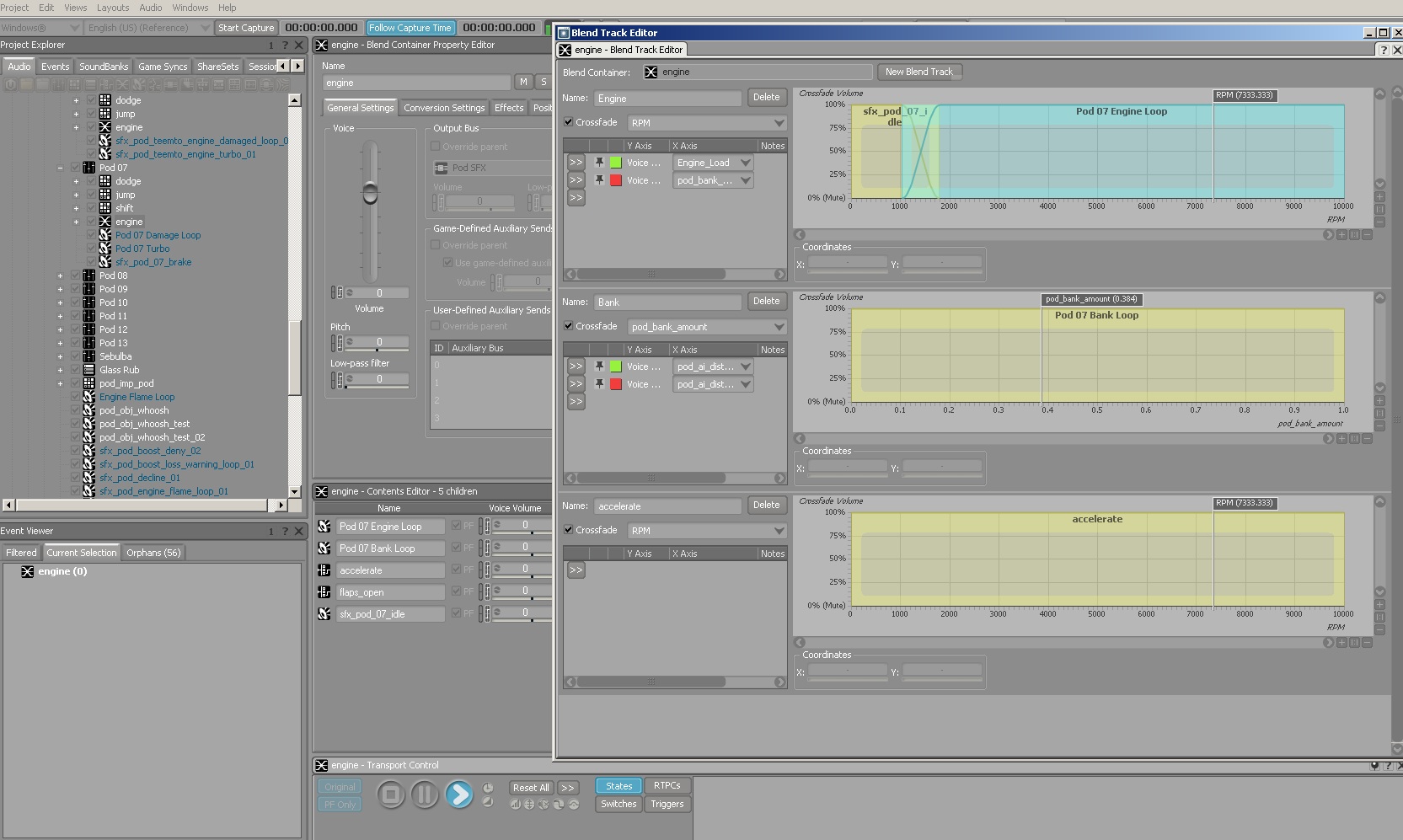

Getting everything into Wwise and hooked up correctly also took a lot of experimenting. We spent days talking with programmers and looking at the physics in the underlying code: velocity, acceleration, braking, doppler effects, banking, collision and so on. We also had to think about the mix: which sounds to emphasize, and when. This required an entire system built around detecting the proximity of the player's pod to the surrounding AI pods and deciding how all the sounds should be scaled relative to one another in mix priority. Most of the physics and proximity tracking became RTPC game parameters that drove the core Wwise system: EQ, attenuation curves and blend tracks.
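To make the RTPC idea above a little more concrete, here is a minimal Python sketch. It is not the Wwise API and not the actual Kinect Star Wars implementation; it only models two of the ideas in plain code, assuming simple piecewise-linear curves like the ones you draw on an RTPC graph. The first is a blend track that crossfades engine loops as a speed parameter rises. The second is a proximity pass that keeps only the nearest AI pods audible and scales each one with a distance attenuation curve. The layer names, speed ranges, curve points and the three-pod limit are all hypothetical values chosen for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass

# A curve is a list of (x, y) points with strictly increasing x,
# loosely modeled on an RTPC graph (hypothetical representation).
Curve = list[tuple[float, float]]


def eval_curve(curve: Curve, x: float) -> float:
    """Piecewise-linear lookup, clamped to the first and last points."""
    if x <= curve[0][0]:
        return curve[0][1]
    if x >= curve[-1][0]:
        return curve[-1][1]
    i = bisect_right([px for px, _ in curve], x)
    (x0, y0), (x1, y1) = curve[i - 1], curve[i]
    return y0 + (x - x0) / (x1 - x0) * (y1 - y0)


@dataclass
class BlendLayer:
    """One engine loop on a blend track; its gain is a trapezoid over speed."""
    name: str
    fade_in: tuple[float, float]   # gain ramps 0 -> 1 across this speed range
    fade_out: tuple[float, float]  # gain ramps 1 -> 0 across this speed range

    def gain(self, speed: float) -> float:
        return eval_curve(
            [(self.fade_in[0], 0.0), (self.fade_in[1], 1.0),
             (self.fade_out[0], 1.0), (self.fade_out[1], 0.0)],
            speed,
        )


# Hypothetical blend track on a 0-100 speed parameter. Adjacent layers
# overlap, so one loop fades out exactly as the next fades in (linear crossfade).
SPEED_LAYERS = [
    BlendLayer("idle", fade_in=(-1.0, 0.0), fade_out=(20.0, 35.0)),
    BlendLayer("cruise", fade_in=(20.0, 35.0), fade_out=(70.0, 85.0)),
    BlendLayer("boost", fade_in=(70.0, 85.0), fade_out=(100.0, 101.0)),
]


@dataclass
class Pod:
    name: str
    distance: float  # meters from the listener (the player's pod)


# Hypothetical distance attenuation: full volume up close, silent at 150 m.
ATTENUATION: Curve = [(0.0, 1.0), (20.0, 0.8), (80.0, 0.25), (150.0, 0.0)]


def mix_ai_pods(pods: list[Pod], max_audible: int = 3) -> dict[str, float]:
    """Keep only the nearest pods, each scaled by the attenuation curve."""
    nearest = sorted(pods, key=lambda p: p.distance)[:max_audible]
    return {p.name: eval_curve(ATTENUATION, p.distance) for p in nearest}


if __name__ == "__main__":
    for speed in (0.0, 27.5, 60.0, 100.0):
        print(speed, {layer.name: round(layer.gain(speed), 2)
                      for layer in SPEED_LAYERS})
    field = [Pod("Sebulba", 10.0), Pod("AI 1", 60.0),
             Pod("AI 2", 120.0), Pod("AI 3", 200.0)]
    print(mix_ai_pods(field))
```

Because neighboring layers share the same fade range, their gains always sum to 1, so the engine never drops out between loops. A linear crossfade can sound slightly quieter at its midpoint, which is why an equal-power curve (for example, sine and cosine gains) is a common alternative.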

Here are some images of the final Wwise system. They show a few of the RTPCs, the blend tracks and the general layout:

Lastly, here is a brief video showing how everything came together in the final game. You can hear all the various elements (modulations, gears and engines) mixed together and adjusted in real time. Although I haven't written about them here, you'll also hear dialog, droids, power-ups and environmental sounds, several of which I created and helped implement as well: